I completed my MSc in Machine Learning at the University of Tübingen in 2024, during which I worked on visualising figurative speech at the Computer Graphics Group; this work led to an Outstanding Paper award at EMNLP 2023. Before starting my master's, I was a Computer Vision Researcher at the Center of Artificial Intelligence, ZHAW, working on domain adaptation in Optical Music Recognition. I have also worked with Dr. Daniel Lin Wen-Yan at SMU on feature correspondence-based object tracking. I completed my BSc in Electrical and Electronics Engineering in Manipal/Singapore. I am eager to collaborate on relevant projects, so please reach out if you are interested!

Email / CV / Google Scholar / GitHub / Twitter / Bluesky / LinkedIn / YouTube

Recent News

Mar 2023 - Sep 2023: Research Assistant at the Computer Graphics Group, Tübingen AI Centre.

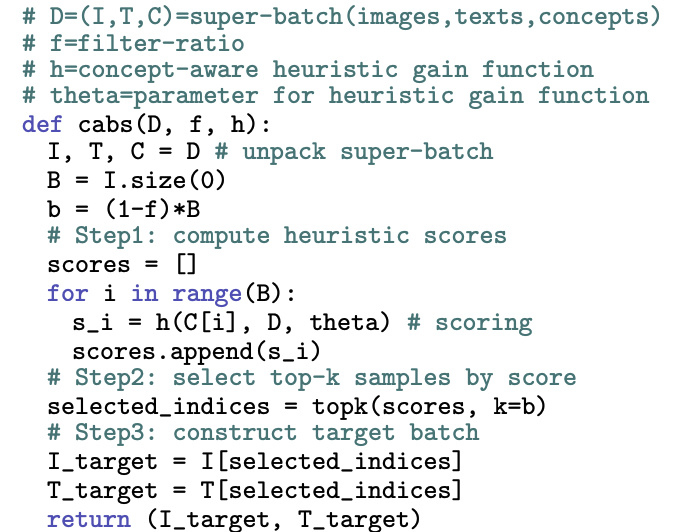

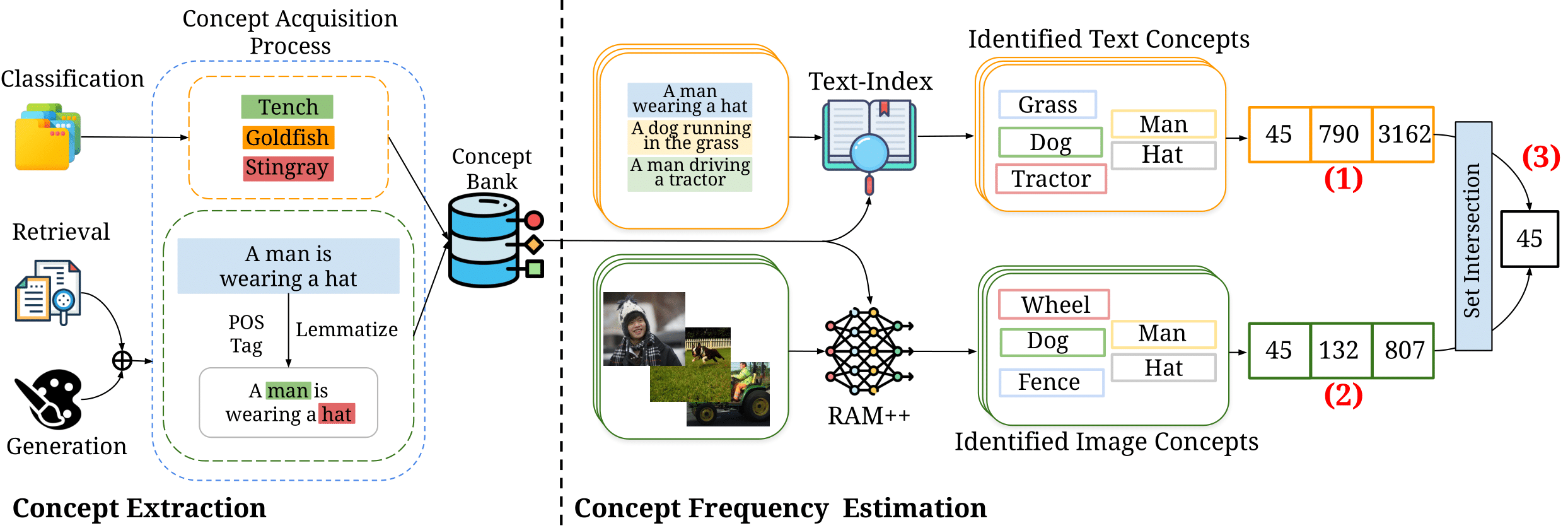

Adhiraj Ghosh, Vishaal Udandarao*, Thao Nguyen*, Matteo Farina*, Mehdi Cherti, Jenia Jitsev, Sewoong Oh, Elisa Ricci, Ludwig Schmidt, Matthias Bethge. arXiv:2511.20643, 2025

Paper

In this work, we show that concept-aware data curation and online batch sampling improve the downstream performance of contrastive vision-language models. We introduce DataConcept, a collection of 128M image-text pairs annotated with concept-centric information, and Concept-Aware Batch Sampling (CABS), a framework that uses concept information to curate batches online rather than relying on static curation.

Adhiraj Ghosh*, Sebastian Dziadzio*, Ameya Prabhu, Vishaal Udandarao, Samuel Albanie, Matthias Bethge. ACL 2025 (Main)

Paper

To evaluate the vast capabilities of foundation models, we introduce ONEBench, a benchmark that unifies individual test sets into one large pool of individual data-measurement samples. We shift the focus from singular test sets to sample-level evaluations, restructuring static benchmarks to accommodate an ever-expanding pool of datasets and models.

Vishaal Udandarao*, Ameya Prabhu*, Adhiraj Ghosh, Yash Sharma, Philip H.S. Torr, Adel Bibi, Samuel Albanie, Matthias Bethge. NeurIPS 2024

Paper / Code / Let It Wag! Benchmark

The impressive empirical performance of VLMs can be attributed to the presence of test concepts in their pretraining datasets, and therefore does not demonstrate "zero-shot" generalization. Instead, these models need exponentially more data on a concept to improve their performance on it linearly.

Hassan Shahmohammadi, Adhiraj Ghosh, Hendrik Lensch. EMNLP 2023 (Outstanding Paper Award)

Paper / Code / Dataset / HuggingFace / Music Videos

ViPE is the first automated model for translating an arbitrary piece of text into a visualisable prompt. It helps any text-to-image model visualise figurative or non-lexical language.

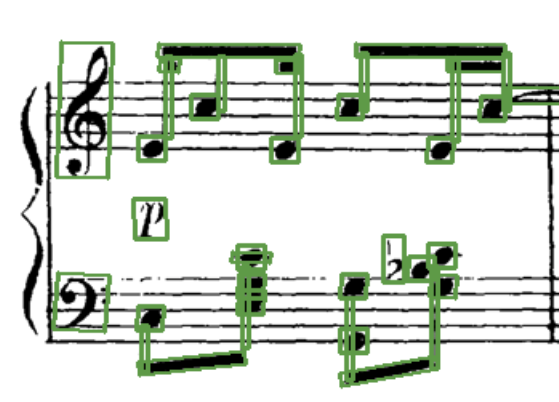

Adhiraj Ghosh*, Lukas Tuggener*, Raphael Emberger*, Pascal Sager*, et al. TISMIR 2023

Paper / Code

We present solutions that improve recognition accuracy in Music Object Recognition on low-quality, real-world music sheet data, and we provide confidence-rated model outputs to enable efficient human post-processing.

Adhiraj Ghosh, Kuruparan Shanmugalingam, Wen-Yan Lin. WACV 2023

Paper / Code / Video / Poster

We propose a new, feature-guided triplet mining scheme that captures intrinsic pose in order to address the intra-class variance problem in re-identification datasets.

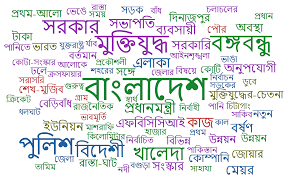

Adhiraj Ghosh, Kamal Sarkar. ICCIDS 2020

Paper / Dataset

This paper describes the Bengali irony detection dataset that we developed and reports results obtained on it using state-of-the-art machine learning methods.